There is no area untouched by data science and computer science. From medicine, to the criminal justice system, to banking & insurance, to social welfare, data driven solutions and automated systems are proposed and developed for various social problems. The fact that computer science is intersecting with various social, cultural and political spheres means leaving the realm of the “purely technical” and dealing with human culture, values, meaning, and questions of morality; questions that need more than technical “solutions”, if they can be solved at all. Critical engagement and ethics are, therefore, imperative to the growing field of computer science.

And the need for ethical and critical engagement is becoming more apparent: hardly a day goes by without a headline about the catastrophic consequences of careless practices, be it a discriminatory automated hiring system or a facial recognition deployment that undermines privacy. With the realization that data science’s subjects of enquiry reach deep into the social, cultural and political has come an attempt to integrate (well, at least to include) ethics in computer science modules (e.g., at Harvard and MIT). The central players in the tech world (e.g., DeepMind) also seem to be moving in the direction of taking ethics seriously.

However, even where the need to integrate ethics and critical thinking into computer science is acknowledged, there are no established frameworks, standards or consensus on how it ought to be done, which is not surprising given that the practice is in its early stages. Oftentimes, ethics is seen as something that can be added on to existing systems (as opposed to a critical and analytical approach that goes beyond, and questions, current underlying assumptions), or as some sort of checklist of dos and don’ts (as opposed to an aspiration that students adopt as part of normal professional data science practice, beyond satisfying module requirements) that can be consulted… and ta da! You have ethics!

In this blog post, we share our approach to integrating critical thinking and ethics into data science as part of the Data Science in Practice module at UCD’s School of Computer Science. The central aspiration of this class is to encourage students to think critically, to question taken-for-granted assumptions and to open up various questions for discussion. Central to this is viewing critical thinking and ethics as an integral part of data science practice rather than a list of dos and don’ts that can be taught in class, and seeing irresponsible and unethical outcomes and practices as things that affect us as individual citizens and shape society for the worse.

The class does not teach a fixed set of ethical foundations to be followed, nor ethical and unethical ways of doing data science. Rather, we present various ethical issues as open questions for discussion: students are given current tools and automated systems (PredPol, for example) and asked to point out possible issues. The class is therefore highly interactive throughout.

In the module (Data Science in Practice), students work in pairs on data science projects of their choice. Depending on the type of question the students choose to tackle, some projects require extensive critical and ethical reflection, while others require less. Nonetheless, all projects are required to include an “Ethical Considerations” section in their final report. This section should ideally reflect on the ethical issues the students came across while working on their chosen project and the ways they mitigated them, as well as issues that could be anticipated to emerge from the work but may be beyond their control.

At the start of the module, we hold a general three-hour lecture on Critical Thinking and Data Ethics. The content is described below for those interested. Given that it is a data science module, the first half of the session raises data-related ethical questions and critical reflection, while the second half focuses on ethics and AI, specifically automated systems.

There are countless ways to approach this, a vast amount of material to include, and many ways to design, frame and direct the conversation. Ours is simply one of them. It fits the module, the department and the students’ backgrounds, and it aligns with the module’s aims and expected outcomes. These factors are likely to differ in other institutions and modules. If you find this helpful, that’s great. If not, we hope this blog post has provided you with some food for thought.

The central content is organized into two parts, as follows. Read on if you are interested in the details. You can also email Abeba.birhane@ucdconnect.ie if you would like the slides.

Part I

- Looking back: hidden history of data
- Unquestioned assumptions and (mis)understandings of the nature of data: a critical look
  - Data reflect objective truth
  - Data exist in a historical, societal, and cultural vacuum
  - The data scientist is often invisible in data science
- Correlation vs causation
- GDPR

Part II

- Bias: automated systems
- Data for Good

The first challenge is to establish why critical thinking and data ethics are important for data scientists. This is the second year the module has run, and one of the lessons learned from last year is that not everybody is on board from the get-go with the need for critical thinking and data ethics in data science. Therefore, although it might seem obvious, it is important to try to get everyone on board before jumping in; this is essential for a productive discussion. Students are more likely to engage and take an interest if they first see why it matters. Examples of past and current data-science-related disasters (Cambridge Analytica and Facebook, for example), the fact that other major computer science departments are doing it, and the requirement to include “Ethical Considerations” in the final report all serve to get them on board.

Looking back: hidden history of data

With the convincing out of the way, a brief look back at the dark history of data and the sciences provides a vivid and gruesome example of the use and abuse of society’s most vulnerable members in the name of data for medical advancement. Nazi-era medical experiments serve as prime examples. Between 1933 and 1945, German anatomists in over 31 departments accepted the bodies of thousands of people killed by the Hitler regime. These bodies were dissected in order to study anatomy. The (in)famous Hermann Stieve (1886–1952) obtained his research “material” from Plötzensee Prison: people the Nazis had sentenced to death, including for minor crimes such as looting. Stieve’s medical practices are among the most ethically harrowing. However, he is also seen as “a great anatomist who revolutionized gynaecology through his clinical-anatomical research.”

The question remains: how should we view research that is scientifically valuable but morally disturbing? This question elicits a great deal of discussion in class.

Ethical red flags and horrifying consequences are much more visible and relatively immediate in medical anatomy research. With data and data-driven automated decision-making, by contrast, the effects and consequences are much more nuanced and often invisible.

At this point, another open question is posed to the class: what makes identifying and mitigating ethical red flags in data science more difficult and nuanced than in the medical sciences?

Unquestioned assumptions and (mis)understandings of the nature of data: a critical look

Data reflect objective truth:

The default thinking within data and computer science tends to assume that data are automatically objective. This persistent misconception, that data are an objective form of information that we simply find “out there”, observe and record, obscures the fact that data can be as subjective as the humans who find and record them. Far from reflecting objective truth, data are political, messy, often incomplete, sometimes fake, and full of complex human meanings. Gitelman’s (2013) book “‘Raw Data’ Is an Oxymoron” is an excellent resource in this regard: “Data is anything but “raw”… we shouldn’t think of data as a natural resource but as a cultural one that needs to be generated, protected, and interpreted.”

Crawford also concisely summarizes the problem with viewing data as a reflection of objective truth:

“Data and data sets are not objective; they are creations of human design. We give numbers their voice, draw inferences from them, and define their meaning through our interpretations. Hidden biases in both the collection and analysis stages present considerable risks, and are as important to the big-data equation as the numbers themselves.”

At almost every step of the data science process, the data scientist makes assumptions and then makes decisions based on them. Consequently, any results that emerge are fundamentally shaped, and potentially biased, by these assumptions, which may or may not be reasonable. This means that data scientists must be transparent about them. The problem is that, oftentimes, data scientists are neither clear about their assumptions nor aware of them at all.
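To make this concrete, here is a minimal, hypothetical Python sketch: the same ten survey responses produce three different “average incomes” depending on a single, easily overlooked preprocessing decision about non-responses. The data, the imputed value and the function names are invented for illustration; none of the three choices is correct in general, which is exactly why the choice needs to be stated.

```python
from statistics import mean

# Hypothetical survey: annual income in thousands; None marks a non-response.
responses = [42, 38, None, 55, None, 61, 29, None, 47, 33]

def mean_dropping_missing(values):
    """Assumption A: non-responses are missing at random, so simply drop them."""
    return mean(v for v in values if v is not None)

def mean_missing_as_zero(values):
    """Assumption B: a non-response means 'no income'."""
    return mean(0 if v is None else v for v in values)

def mean_missing_as_low(values, floor=20):
    """Assumption C: low earners are less likely to answer, so impute a
    hypothetical low value (floor) for every non-response."""
    return mean(floor if v is None else v for v in values)

for label, estimator in [("drop missing", mean_dropping_missing),
                         ("missing = 0", mean_missing_as_zero),
                         ("missing = 20", mean_missing_as_low)]:
    print(f"{label:>13}: {estimator(responses):.1f}")
```

This prints 43.6, 30.5 and 36.5: a spread of about 13 units produced by one line of preprocessing rather than by anything in the world the data describe.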

Data exist in a historical, societal, and cultural vacuum:

Far from reflecting objective truth, data often reflect historical inequalities, norms and cultural practices. Our code then picks up these inequalities and norms, treats them as the “ground truth”, and amplifies them. As a result, women being paid less than men, for example, comes to be taken as the norm and gets amplified by algorithmic systems. Amazon’s recently discontinued hiring AI is a prime example. The training data for Amazon’s hiring algorithm were historical: CVs submitted to the company over the previous 10 years. In this case, past success was taken as an indication of future success, and in the process, CVs that didn’t fit the profile of past success (women’s) were eliminated from consideration. This type of predictive system works under the assumption that the future looks like the past, which is problematic because people and societies change over time. Algorithmic decision-making like Amazon’s creates an algorithm-driven determinism in which people are deprived of the chance to be the exception to the rule.
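The mechanism can be sketched in a few lines of Python. This is a deliberately naive, hypothetical “model” (not Amazon’s system): it treats past hiring decisions as ground truth, learns how often each group was hired, and scores new candidates accordingly. The records, group labels and function names are invented.

```python
from collections import defaultdict

# Hypothetical historical records: (group, years_experience, hired).
# The past process hired group "A" far more often than group "B",
# even at similar experience levels.
history = [
    ("A", 5, True), ("A", 3, True), ("A", 4, True), ("A", 2, False),
    ("B", 5, False), ("B", 3, False), ("B", 4, True), ("B", 6, False),
]

def learn_hire_rates(records):
    """'Train' by estimating P(hired | group) from past decisions,
    treating those decisions as the ground truth."""
    counts = defaultdict(lambda: [0, 0])  # group -> [hired, total]
    for group, _experience, hired in records:
        counts[group][0] += int(hired)
        counts[group][1] += 1
    return {g: hired / total for g, (hired, total) in counts.items()}

def score(candidate, rates):
    """Score a new candidate purely by how 'people like them' fared before."""
    group, _experience = candidate
    return rates.get(group, 0.0)

rates = learn_hire_rates(history)
# Two new candidates with identical experience, differing only in group.
alice = ("A", 5)
beth = ("B", 5)
print(score(alice, rates), score(beth, rates))  # 0.75 0.25
```

Real systems rarely take the group label as an explicit input, but the same pattern can be learned through proxy features that correlate with it, which makes it harder to spot.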

The data scientist is often invisible in data science:

Due to the “view from nowhere” approach under which most of data science operates, the data scientist is almost always absent from data science. With the spotlight on the data scientist, we can see that people are always making important choices, including which data to include (and, by default, which to exclude); how data are weighed, analysed, manipulated and reported; and how good is “good enough” for an algorithm’s performance.

And people are biased. We form stereotypes and rely on them as shortcuts in our day-to-day lives. For example, a CV with a white-sounding name will receive a different (more positive) response than the same CV with a black-sounding name. Women’s chances of being hired by symphony orchestras increase by between 30% and 55% with blind auditions (Goldin & Rouse, 1997). We can only see things from our own point of view, which is entangled with our history and our cultural and social norms. Putting the spotlight on the data scientist allows us to acknowledge the personal motivations, beliefs, values and biases that directly or indirectly shape our scientific practices.

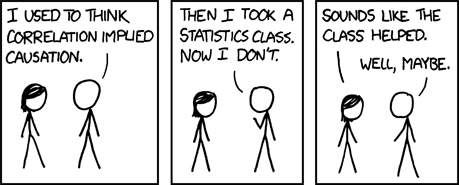

Correlation vs causation

The statement that “correlation is not causation” might seem obvious, but it is one that most of us need to be reminded of regularly. In this regard, Bradford Hill’s criteria for causation are a helpful framework. Hill’s nine criteria, considerations for judging whether an observed association is likely to be causal, were originally developed as a research tool in the medical sciences. However, they are equally applicable and relevant to data scientists. Hill’s nine criteria are: strength, consistency, specificity, temporality, biological gradient (dose-response), plausibility, coherence, experiment, and analogy. The more criteria that are met, the more likely the relationship is causal. xkcd.com provides witty and entertaining comics for each of Hill’s criteria for causation.

GDPR

Ethical questions inevitably arise with all innovation. Unfortunately, they are often an afterthought rather than anticipated and mitigated. As data scientists, we are trying to answer questions that implicitly or explicitly intersect with the social, medical, political, psychological and legal spheres. Ethical and responsible practices are not only personally expected but also legally required. To this end, awareness of and compliance with the GDPR are crucial when collecting, storing and processing personal data. Students working with personal data are directed to the university’s GDPR Road Map.

Having said that, the GDPR can only serve as a framework; it is not a final answer that provides clear, black-and-white solutions. We cannot foresee what our data will reveal in conjunction with other data. Furthermore, privacy is not something we can always negotiate person by person; rather, it is something we need to consider across the whole network. Look no further than the Strava debacle, in which the fitness app’s publicly released global heatmap of aggregated user activity revealed the locations and layouts of military bases.
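A toy, hypothetical illustration of why data cannot be judged in isolation: a dataset with names removed can still be re-identified by joining it with another dataset on quasi-identifiers such as postcode, birth year and sex (a technique usually called a linkage attack). All records, names and field names below are invented.

```python
# Hypothetical "anonymised" health records: names removed, quasi-identifiers kept.
health_records = [
    {"zip": "D04", "birth_year": 1985, "sex": "F", "diagnosis": "asthma"},
    {"zip": "D06", "birth_year": 1990, "sex": "M", "diagnosis": "diabetes"},
    {"zip": "D04", "birth_year": 1972, "sex": "M", "diagnosis": "depression"},
]

# Hypothetical public dataset (e.g. a membership list) that does include names.
public_list = [
    {"name": "Aoife", "zip": "D04", "birth_year": 1985, "sex": "F"},
    {"name": "Brian", "zip": "D06", "birth_year": 1990, "sex": "M"},
    {"name": "Cathal", "zip": "D08", "birth_year": 1972, "sex": "M"},
]

QUASI_IDENTIFIERS = ("zip", "birth_year", "sex")

def key(record):
    """The combination of quasi-identifiers used to match records."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)

def link(anonymous, identified):
    """Join two datasets on quasi-identifiers; a unique match re-identifies someone."""
    index = {}
    for person in identified:
        index.setdefault(key(person), []).append(person["name"])
    matches = {}
    for record in anonymous:
        names = index.get(key(record), [])
        if len(names) == 1:  # exactly one candidate: re-identified
            matches[names[0]] = record["diagnosis"]
    return matches

print(link(health_records, public_list))
# {'Aoife': 'asthma', 'Brian': 'diabetes'}
```

Neither dataset on its own links a diagnosis to a named person; the disclosure appears only when they are combined.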

This is a murky and complex area, and the idea is not to equip students with the fine-grained details of privacy or the GDPR but rather to raise awareness.

Part II

Bias: automated systems

Can we solve problems stemming from human bias by turning decisions over to machines? In theory, handing more and more decisions to algorithms should eliminate human biases and prejudices. Algorithms are, after all, “neutral” and “objective”: they apply the same rules to everybody regardless of race, gender, ethnicity or ability. The reality, however, is far from this. As O’Neil points out, automated systems only give us the illusion of neutrality. Case after case has demonstrated that automated systems can, in fact, become tools that perpetuate and amplify inequality and unfairness. Examples include recidivism-prediction algorithms and hiring algorithms.
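One simple starting point for auditing an automated system for unequal outcomes is to compare selection rates across groups. Below is a minimal sketch on an invented decision log. The 0.8 threshold in the comment comes from the “four-fifths rule” heuristic in U.S. employment guidelines; passing or failing it neither proves nor rules out unfairness.

```python
# Hypothetical decision log from an automated CV-screening tool: (group, selected).
decisions = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)

def selection_rates(log):
    """Fraction of each group's candidates that the system selected."""
    totals, selected = {}, {}
    for group, chosen in log:
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(chosen)
    return {group: selected[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 = equal rates)."""
    return min(rates.values()) / max(rates.values())

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'A': 0.6, 'B': 0.3}
print(round(ratio, 2))  # 0.5, well below the four-fifths (0.8) heuristic
```

A check like this only looks at outcomes; it says nothing about whether the historical labels the system learned from were fair in the first place.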

The consequences of automated decisions may not be grave or immediate when a system is recommending which book we might like to buy next based on our previous purchases. The stakes are much higher, however, when automated systems are diagnosing illness or holding sway over a person’s job application or prison sentence.

O’Neil makes a powerful argument that the objectives of a mathematical model determine whether it becomes a force for good or a tool that wields and perpetuates existing and historical bias. Automated systems, which are often developed by commercial firms for profit, tend to optimize for efficiency and profit, often at the cost of fairness. Take the U.S. prison system, for example. Questions such as how the prison system could be improved are almost never considered. Instead, the goal seems to be to lock away as many people as possible. Consequently, algorithmic systems within the prison system strive to flag people deemed likely to reoffend so that they can be locked away.

The stream of data we produce serves as a source of insight into our lives and behaviours. Instead of testing whether these insights and intuitions stand up to scientific scrutiny, the data we produce are used to justify the modellers’ intuitions and to reinforce pre-existing assumptions and prejudices. And the feedback loop goes on: once again, associations are taken as evidence that justifies pre-existing assumptions.
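Such a feedback loop can be made concrete with a deliberately simplified, hypothetical simulation, loosely in the spirit of published analyses of predictive policing. It is not PredPol’s or any real system’s algorithm. Two districts have exactly the same true incident rate, but incidents are only recorded where the patrol is sent, and the patrol is sent wherever past records are highest.

```python
def simulate(initial_records, true_rate=10, days=30):
    """Toy model: each day, send the single patrol to the district with the most
    recorded incidents; only incidents in the patrolled district get recorded.
    Every district is assumed to have the same true rate of `true_rate` per day."""
    recorded = dict(initial_records)  # copy so the caller's dict is untouched
    for _ in range(days):
        target = max(recorded, key=recorded.get)  # the "predicted hotspot"
        recorded[target] += true_rate             # only observed incidents count
    return recorded

# A one-incident difference in the historical records...
print(simulate({"North": 11, "South": 10}))  # {'North': 311, 'South': 10}
```

After 30 days, North has more than 30 times as many recorded incidents as South, although by construction both districts are identical. The data “confirm” the initial assumption only because that assumption decided where data were collected.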

Algorithmic systems increasingly present new ways to sort, profile, exclude and discriminate within the complex social world. Because these systems are opaque, we often don’t know that something has gone wrong until a large number of people, often society’s most vulnerable, have been affected. We therefore need to anticipate the possible consequences of our work before things go wrong. As we’ve seen in previous examples, algorithmic decision-making increasingly intersects with the social sphere, blurring the boundaries between technology and society, public and private. As data scientists working to solve society’s problems, we need to understand these complex and fuzzy boundaries and the cultural, historical and social context of our data and algorithmic tools.

In domains such as medicine and psychology, where work has a direct or indirect impact on individuals or society, ethical frameworks are often in place. Ethics is an integral part of medical training, for example. Physicians are held to specific ethical standards through the practice of swearing the Hippocratic Oath and through various medical ethics boards.

At this stage another question is put forward to the class: Given that data scientists, like physicians, work to solve society’s problems, influencing it in the process, should data scientists then be held to the same standard as physicians?

Data for Good

Most of the content of this lecture consists of cautionary tales or warnings, which at times might dishearten students. This year we have added a section on “Data for Good” towards the end. It helps conclude the lecture on a more positive note by illustrating how data science is being used for social good.

The Geena Davis Institute, in collaboration with Google.org, is using data to identify gender bias within the film industry. They analysed the 100 highest-grossing (US domestic) live-action films from 2014, 2015 and 2016. The findings show that men are seen and heard nearly twice as often as women. Such work is crucial for raising awareness of blind spots in media and encourages storytellers to be inclusive.

“Data helps us understand what it is we need to encourage creators to do. Small things can have a huge impact.” Geena Davis, Academy Award-winning actor, founder and chair of the Geena Davis Institute on Gender in Media

Similarly, the Troll Patrol project by Amnesty International and Element AI studied online abuse against women. They studied millions of tweets received throughout 2017 by 778 women journalists and politicians in the UK and US, and commissioned an online poll of women in 8 countries about their experiences of abuse on social media. Over 6,500 volunteers from around the world took part, analysing 288,000 tweets to create a labelled dataset of abusive or problematic content. The findings show that 7.1% of tweets sent to the women in the study were “problematic” or “abusive”. This amounts to 1.1 million tweets mentioning the 778 women across the year, or roughly one every 30 seconds. Furthermore, women of colour (black, Asian, Latinx and mixed-race women) were 34% more likely than white women to be mentioned in abusive or problematic tweets. Black women were disproportionately targeted, being 84% more likely than white women to be mentioned in abusive or problematic tweets.
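The “one every 30 seconds” figure follows from simple arithmetic on the reported yearly total and is easy to sanity-check. This is a quick check of our own, not part of the study’s published method:

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 2017 was not a leap year: 31,536,000 s
abusive_or_problematic = 1_100_000     # yearly total reported by the study

seconds_between = SECONDS_PER_YEAR / abusive_or_problematic
print(f"about one every {seconds_between:.1f} seconds")  # about one every 28.7 seconds
```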

This sums up how we currently organize the content and the tone we are aiming for. The ambition is a fundamental rethinking of taken-for-granted assumptions, and to think of ethical data science in a broader sense, as work that potentially affects society, rather than simply as “not using personal information for research”. Whether we succeed remains to be seen. Furthermore, this is a fast-moving field where the impacts of technology, as well as ways of thinking about ethics, are continually changing. Given this, and the fact that we continually incorporate student feedback and lessons about what has (not) worked, next year’s content may look slightly different.

This part: “Case after case have demonstrated that automated systems, in fact, can become tools that perpetuate and amplify inequalities and unfairness” is crucial. I think first, this fact needs to be better recognized. And second, data science teams need to more fully account for how they made choices around using data with embedded biases. If a data source or a specific feature is borne out of a structural inequality, should that data simply be discarded? What if inclusion of that feature makes the algorithm more accurate? Who makes the choice to have a less valid model in exchange for a more biased model?

Jesse Rio Russell, PhD

http://www.bprac.com
