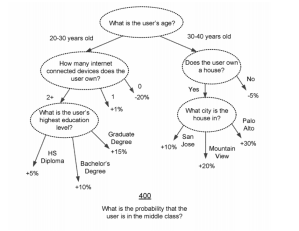

We live in the age of algorithms. Where the internet is, algorithms are. The apps on our phones are the results of algorithms. GPS can bring us from point A to point B thanks to algorithms. More and more decisions affecting our daily lives are handed over to automation. Whether we are applying for college, seeking jobs, or taking out loans, mathematical models are increasingly involved in the decision making. They pervade schools, the courts, the workplace, and even the voting process. We are continually ranked, categorized, and scored in hundreds of models, on the basis of our revealed preferences and patterns; as shoppers and couch potatoes, as patients and loan applicants, and very little of this do we see – even in applications that we happily sign up for.

More and more decisions being handed over to algorithms should in theory mean fewer human biases and prejudices. Algorithms are, after all, “neutral” and “objective”. They apply the same rules to everybody regardless of race, gender, ethnicity or ability. However, this couldn’t be further from the truth. In fact, mathematical models can be, and in some cases have been, tools that further inequality and unfairness. O’Neil calls these kinds of models Weapons of Math Destruction (WMDs). WMDs are biased, unfair and ubiquitous. They encode poisonous prejudices from past records and work against society’s most vulnerable, such as racial and ethnic minorities, low-wage workers, and women. It is as if these models were designed expressly to punish them and to keep them down. As the world of data continues to expand, each of us producing ever-growing streams of updates about our lives, so do prejudice and unfairness.

Mathematical models have revolutionized the world, and efficiency is their hallmark. They aren’t just tools that create and distribute bias, unfairness and inequality. In fact, models by their nature are neither good nor bad, neither fair nor unfair, neither moral nor immoral – they are simply tools. The sports domain is a good example of where mathematical models are a force for good. For some of the world’s most competitive baseball teams today, competitive advantages and wins depend on mathematical models. Managers make decisions, sometimes moving players across the field, based on analysis of historical data and the current situation, calculating the positioning associated with the highest probability of success.

There are crucial differences, however, between models such as those used by baseball managers and WMDs. While the former are transparent and constantly updated with feedback, the latter are opaque and inscrutable black boxes. Furthermore, while the baseball analytics engines manage individuals, each one potentially worth millions of dollars, companies hiring minimum-wage workers are managing herds. Their objective is to optimize profits, so they slash their expenses by replacing human resources professionals with automated systems that filter large populations into manageable groups. Unlike the baseball teams, these companies have little reason – say, plummeting productivity – to tweak their filtering model. O’Neil’s primary focus in the book is models that are opaque and inscrutable, those used within powerful institutions and industries, which create and perpetuate inequalities – WMDs – “The dark side of Big Data”!

The book contains crucial insights (or haunting warnings, depending on how you choose to approach it) into the catastrophic directions in which mathematical models used in the social sphere are heading. And it couldn’t come from a more credible and experienced expert than a Harvard mathematician who then went to work as a quant for D. E. Shaw, a leading hedge fund, and an experienced data scientist, among other things.

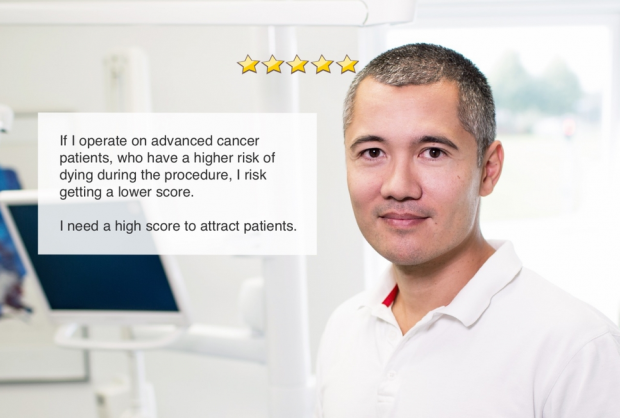

One of the most persistent themes of O’Neil’s book is that the central objectives of a given model are crucial. In fact, objectives determine whether a model becomes a tool that helps the vulnerable or one that is used to punish them. WMDs’ objectives are often to optimize efficiency and profit, not justice. This, of course, is the nature of capitalism. And WMDs’ efficiency comes at the cost of fairness – they become biased, unfair, and dangerous. The destructive loop goes around and around, and in the process models become more and more unfair.

Legal traditions lean strongly towards fairness … WMDs, by contrast, tend to favour efficiency. By their very nature, they feed on data that can be measured and counted. But fairness is squishy and hard to quantify. It is a concept. And computers, for all their advances in language and logic, still struggle mightily with concepts. They “understand” beauty only as a word associated with the Grand Canyon, ocean sunsets, and grooming tips in Vogue magazine. They try in vain to measure “friendship” by counting likes and connections on Facebook. And the concept of fairness utterly escapes them. Programmers don’t know how to code for it, and few of their bosses ask them to. So fairness isn’t calculated into WMDs and the result is massive, industrial production of unfairness. If you think of a WMD as a factory, unfairness is the black stuff belching out of the smoke stacks. It’s an emission, a toxic one. [94-5]

The prison system is a startling example of where WMDs are increasingly used to further reinforce structural inequalities and prejudices. In the US, for example, those imprisoned are disproportionately poor and of colour. Being a black male in the US makes you nearly seven times more likely to be imprisoned than if you were a white male. Are such convictions fair? Many different lines of evidence suggest otherwise. Black people are arrested more often, judged guilty more often, treated more harshly by correctional officers, and serve longer sentences than white people who have committed the same crime. The Black imprisonment rate for drug offenses, for example, is 5.8 times higher than it is for whites, despite a roughly comparable prevalence of drug use.

Prison systems, which are awash in data, hardly ever carry out important research, such as why non-white prisoners from poor neighbourhoods are more likely to commit crimes or what the alternative ways of looking at the same data are. Instead, they use data to justify the workings of the system and further punish those that are already at a disadvantage. Questions about the workings of the system, or enquiries into how the prison system could be improved, are almost never considered. If, for example, building trust were the objective, an arrest may well become the last resort, not the first. Trust, like fairness, O’Neil explains, is hard to quantify and presents a great challenge to modellers even when the intention is there to consider such concepts as part of the objective.

Sadly, it’s far simpler to keep counting arrests, to build models that assume we’re birds of a feather and treat us as such… Innocent people surrounded by criminals get treated badly. And criminals surrounded by law-abiding public get a pass. And because of the strong correlation between poverty and reported crime, the poor continue to get caught up in these digital dragnets. The rest of us barely have to think about them. [104]

Insofar as these models rely on barely tested insights, they are in a sense not that different from phrenology – digital phrenology. Phrenology, the practice of using outer appearance to infer inner character, which in the past justified slavery and genocide, has been outlawed and is considered pseudoscience today. However, phrenology and scientific racism are entering a new era, with machine-learned models lending them the appearance of justified “objectivity”. “Scientific” criminological approaches now claim to “produce evidence for the validity of automated face-induced inference on criminality”. However, what these machine-learned “criminal judgements” pick up on, more than anything, is systematic unfairness and human bias embedded in historical data.

A model that profiles us by our circumstances helps create the environment that justifies its assumptions. The stream of data we produce serves as insight into our lives and behaviours. Instead of testing whether these insights stand up to scientific scrutiny, the data we produce are used to justify the modellers’ assumptions and to reinforce pre-existing prejudice. And the feedback loop goes on.

When I consider the sloppy and self-serving ways that companies use data, I am often reminded of phrenology… Phrenology was a model that relied on pseudo-scientific nonsense to make authoritative pronouncements, and for decades it went untested. Big Data can fall into the same trap. [121-2]

In 1896, Hoffman published a 330-page report in which he used exhaustive statistics to support a claim as pseudo-scientific and dangerous as phrenology. He made the case that the lives of black Americans were so precarious that the entire race was uninsurable. However, not only were Hoffman’s statistics flawed, like those of many of the WMDs O’Neil discusses throughout the book, he also confused correlation with causation. The voluminous data he gathered served only to confirm his thesis: race is a powerful predictor of life expectancy. Furthermore, Hoffman failed to separate the “Black” population into different geographical, social or economic cohorts, blindly assuming that the whole “Black” population is a homogeneous group.

This cruel industry has now been outlawed. Nonetheless, the unfair and discriminatory practices remain, in a far subtler form – they are now coded into the latest generations of WMDs and obfuscated under complex mathematics. Like Hoffman, the creators of these new models confuse correlation with causation, and they punish the struggling classes and racial and ethnic minorities. And they back up their analysis with reams of statistics, which give them the studied air of “objective science”.

What is even more frightening is that as oceans of behavioural data continue to feed straight into artificial intelligence systems, these systems will, for the most part, remain a black box to the human eye. We will rarely learn about the classes that we have been categorized into or why we were put there, and, unfortunately, these opaque models are as much a black box to those who design them. In any case, many companies would go out of their way to hide the results of their models, and even their existence.

In the era of machine intelligence, most of the variables will remain a mystery... automatic programs will increasingly determine how we are treated by other machines, the ones that choose the ads we see, set prices for us, line us up for a dermatologist appointment, or map our routes. They will be highly efficient, seemingly arbitrary, and utterly unaccountable. No one will understand their logic or be able to explain it. If we don’t wrest back a measure of control, these future WMDs will feel mysterious and powerful. They’ll have their way with us, and we’ll barely know it is happening. [173]

In the current insurance system (at least as far as the US is concerned), the auto insurers’ tracking systems, which provide insurers with more information and enable them to create more powerful predictions, are opt-in. Only those willing to be tracked have to turn on their black boxes. Those who do turn them on are rewarded with discounts, while the rest subsidize those discounts with higher rates. Insurers who squeeze the most intelligence out of this information, turning it into profits, will come out on top. This, unfortunately, undermines the whole idea of collectivization of risk on which insurance systems are based. The more insurers benefit from such data, the more of it they demand, gradually making trackers the norm. Consumers who want to withhold all but the essential information from their insurers will pay a premium. Privacy, increasingly, will come at a premium cost. A recently approved US bill illustrates just that. This bill would expand the reach of “Wellness Programs” to include genetic screening of employees and their dependents and increase the financial penalties for those who choose not to participate.

Being poor in a world of WMDs is getting more and more dangerous and expensive. Even privacy is increasingly becoming a luxury that only the wealthy can afford. In a world which O’Neil calls a ‘data economy’, where artificial intelligence systems are hungry for our data, we are left with very few options but to produce and share as much data about our lives as possible. In the process, we are (implicitly or explicitly) coerced into self-monitoring and self-discipline. We are pressured into conforming to ideal bodies and “normal” health statuses as dictated by the organizations and institutions that handle and manage our social relations, such as our health insurers. Raley (2013) refers to this as dataveillance: a form of continuous surveillance through the use of (meta)data. The ever-growing flow of data, including data pouring in from the Internet of Things – the Fitbits, Apple Watches, and other sensors that relay updates on how our bodies are functioning – continues to contribute towards this “dataveillance”.

One might argue that helping people deal with their weight and health issues isn’t such a bad thing, and that would be a reasonable argument. However, the key question here, as O’Neil points out, is whether this is an offer or a command. Using flawed statistics like the BMI, which O’Neil calls “mathematical snake oil”, corporations dictate what the ideal health and body look like. They infringe on our freedom as they mould our health and body ideals. They punish those that they don’t like to look at and reward those that fit their ideals. Such exploitation is disguised as scientific and legitimized through the use of seemingly scientific numerical scores such as the BMI. The BMI, kg/m² (a person’s weight in kilograms divided by the square of their height in metres), is only a crude numerical approximation for physical fitness. And since the “average” man underpins its statistical scores, it is more likely to conclude that women are “overweight” – after all, we are not “average” men. Even worse, black women, who often have higher BMIs, pay the heaviest penalties.
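
To make concrete how little information goes into this score, here is a minimal Python sketch of the BMI formula described above. The function name `bmi` and the sample weight and height are illustrative assumptions, not figures taken from O’Neil’s book.

```python
def bmi(weight_kg: float, height_m: float) -> float:
    """Body mass index: weight in kilograms divided by height in metres squared."""
    if weight_kg <= 0 or height_m <= 0:
        raise ValueError("weight and height must be positive")
    return weight_kg / height_m ** 2


# Hypothetical person: 70 kg and 1.75 m tall.
# 70 / (1.75 * 1.75) = 70 / 3.0625, which rounds to 22.9.
print(round(bmi(70.0, 1.75), 1))
```

The function takes only two inputs. Sex, age, and body composition never enter the calculation, so two very different people with the same weight and height receive exactly the same score.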

The control of great amounts of data and the race to build powerful algorithms are a fight for political power. O’Neil’s breathtakingly critical look at corporations like Facebook, Apple, Google, and Amazon illustrates this. Although these powerful corporations are usually focused on making money, their profits are tightly linked to government policies, which makes the issue essentially a political one.

These corporations have significant amounts of power and a great amount of information on humanity, and with that, the means to steer us in any way they choose. The activity of a single Facebook algorithm on Election Day could not only change the balance of Congress, but also potentially decide the presidency. When you scroll through your Facebook updates, what appears on your screen is anything but neutral – your newsfeed is censored. Facebook’s algorithms decide whether you see bombed Palestinians or mourning Israelis, a policeman rescuing a baby or battling a protester. One might argue that television news has always done the same and that this is nothing new. CNN, for example, chooses to cover a certain story from a certain perspective, in a certain way. However, the crucial difference is that, with CNN, the editorial decision is clearly on the record. We can point to individual people as responsible and accountable for any given decision, and the public can debate whether that decision is the right one. Facebook, on the other hand, as O’Neil puts it, is more like the “Wizard of Oz” – we do not see the human beings involved. With its enormous power, Facebook can affect what we learn, how we feel, and whether we vote – and we are barely aware of any of it. What we know about Facebook, like other internet giants, comes mostly from the tiny proportion of their research that they choose to publish.

In a society where money buys influence, these WMD victims are nearly voiceless. Most are disenfranchised politically. The poor are hit the hardest and all too often blamed for their poverty, their bad schools, and the crime that afflicts their neighbourhoods. They, for the most part, lack economic power, access to lawyers, or well-funded political organizations to fight their battles. From bringing down minorities’ credit scores to sexism in the workplace, WMDs serve as tools. The result is widespread damage that all too often passes for inevitability.

Again, it is easy to point out that injustice, whether based on bias or greed, has been with us forever and that WMDs are no worse than the human nastiness of the recent past. As with the above examples, the difference is transparency and accountability. Human decision making has one chief virtue. It can evolve. As we learn and adapt, we change. Automated systems, especially those O’Neil classifies as WMDs, by contrast stay stuck in time until engineers dive in to change them.

If a Big Data college application model had established itself in the early 1960s, we still wouldn’t have many women going to college, because it would have been trained largely on successful men. [204]

Rest assured, the book is not all doom and gloom, nor does it claim that all mathematical models are biased and unfair. In fact, O’Neil provides plenty of examples of models that are used for good and models that have the potential to be great.

Whether a model becomes a tool to help the vulnerable or a weapon to inflict injustice, as O’Neil emphasizes time and time again, comes down to its central objectives. Mathematical models can sift through data to locate people who are likely to face challenges, whether from crime, poverty, or education. The kinds of objectives adopted dictate whether such intelligence is used to reject or punish those that are already vulnerable or to reach out to them with the resources they need. So long as the objectives remain maximizing profit, excluding as many applicants as possible, or locking up as many offenders as possible, these models serve as weapons that further inequalities and unfairness. Change that objective from leeching off people to reaching out to them, and a WMD is disarmed – and can even become a force for good. The process begins with the modellers themselves. Like doctors, data scientists should pledge a Hippocratic Oath, one that focuses on the possible misuse and misinterpretation of their models. Additionally, initiatives such as the Algorithmic Justice League, which aim to increase awareness of algorithmic bias, provide space for individuals to report such biases.

Opaqueness is a common feature of WMDs. People have been dismissed from work, sent to prison, or denied loans due to their algorithmic credit scores with no explanation as to how or why. The more we are aware of their opaqueness, the better chance we have of demanding transparency and accountability, and this begins by making ourselves aware of the work of experts like O’Neil. This is not a book only for those working in data science, machine learning or other related fields, but one that everyone needs to read. If you are a modeller, this book should encourage you to zoom out, consider the individuals behind the data points that your algorithms manipulate, and think about the big questions, such as the objectives behind your code. Almost everyone, to a greater or lesser extent, is part of the growing world of the ‘data economy’. The more awareness there is of the dark side of these machines, the better equipped we are to ask questions and to demand answers from those behind the machines that decide our fate.
